15 Dec

Evaluation Partnerships and the Systems Evaluation Protocol: Program Modeling and “Aha!” Moments

In the final post of their series on the Systems Evaluation Protocol, Monica Hargraves and Jennifer Brown Urban show how the process of developing logic and pathway models can catalyze “Aha” moments about the real purposes and benefits of different parts of a program strategy.

—

To-do lists just get longer. Full plates get fuller. There’s increasing pressure to do more in less time, to catch two birds with one net. What if we told you that evaluation planning can help you do that – help you serve multiple goals at once? The key is to capture valuable side-benefits of the process.

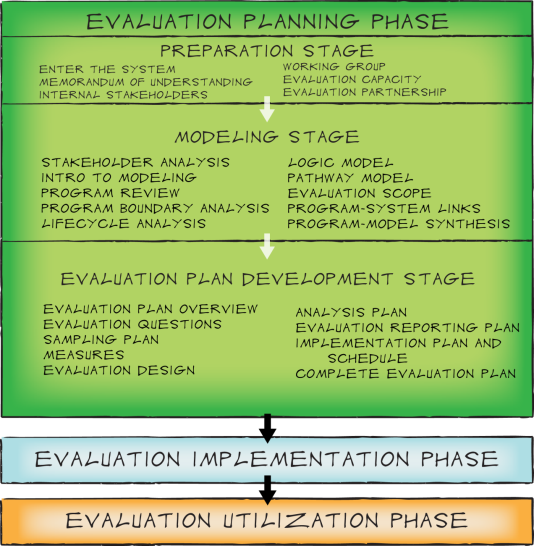

The Systems Evaluation Protocol (SEP) offers a stepwise approach to evaluation planning whose benefits go beyond the evaluation planning process itself. Today’s post focuses on the “Aha!” Moments we’ve observed over and over again as practitioners work on the logic and pathway modeling steps of the SEP (see the first post in this series for background on the SEP). All of the Protocol steps are laid out in Figure 1.

The development and analysis of the logic and pathway models are distinctive features of the SEP process. The resulting program models, especially the pathway model, become the foundation for program analysis and strategic decision-making that inform the evaluation focus and methodology. What we have found in the course of facilitating the SEP with many program working groups is that the process of developing these models has valuable side-benefits of its own. Just from the modeling alone, program staff come to better understand their program and develop a shared vision of it, are able to communicate more effectively with stakeholders about how the program works and what it accomplishes, and in turn are able to write better funding proposals and program reports.

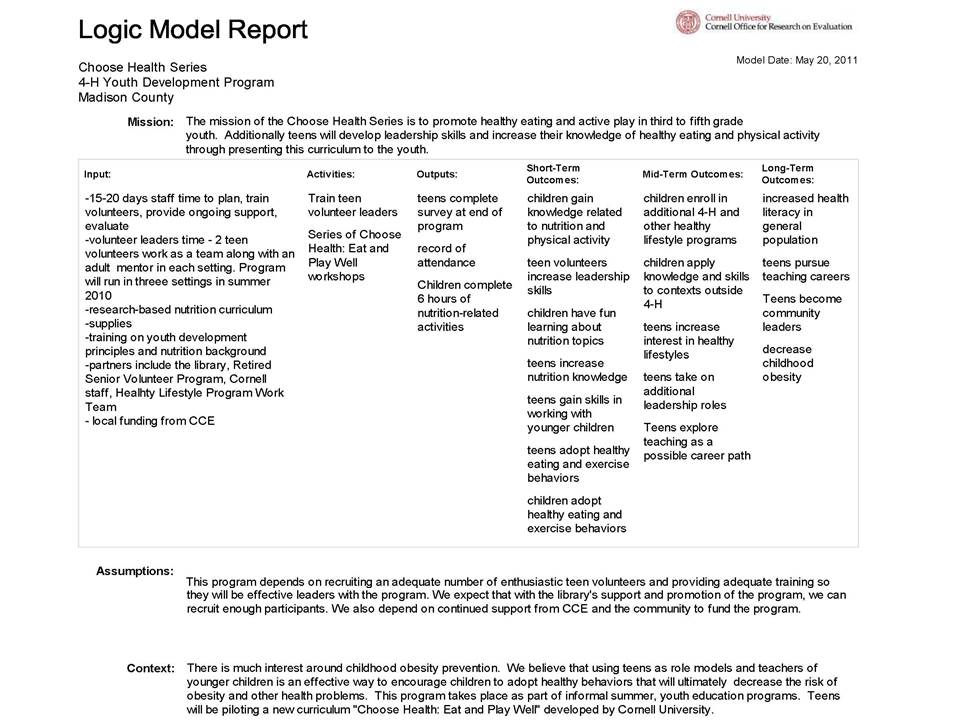

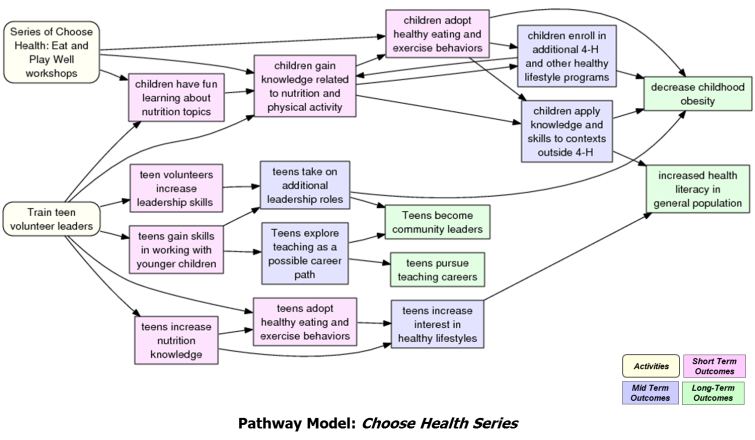

The pathway models are uniquely powerful in this discovery process. Pathway models are the visual counterpart to the more familiar columnar logic model. (See Figures 2 and 3 for examples of each type of model.) Pathway models incorporate the theory of change that underlies the program’s design and how it is believed to achieve its outcomes. The arrows tell the story of how the program works.

One way for Working Groups to develop pathway models is to create a logic model first, print it out, and then draw arrows from each activity to the associated short-term outcome(s), then from those to the associated short- or mid-term outcomes, and so on. Alternatively, Working Groups can build the pathway model directly by writing activities and outcomes on index cards, assembling them on poster paper on a table or wall, and drawing in the arrows that represent the process of change they understand for their program. (Figure 4.)

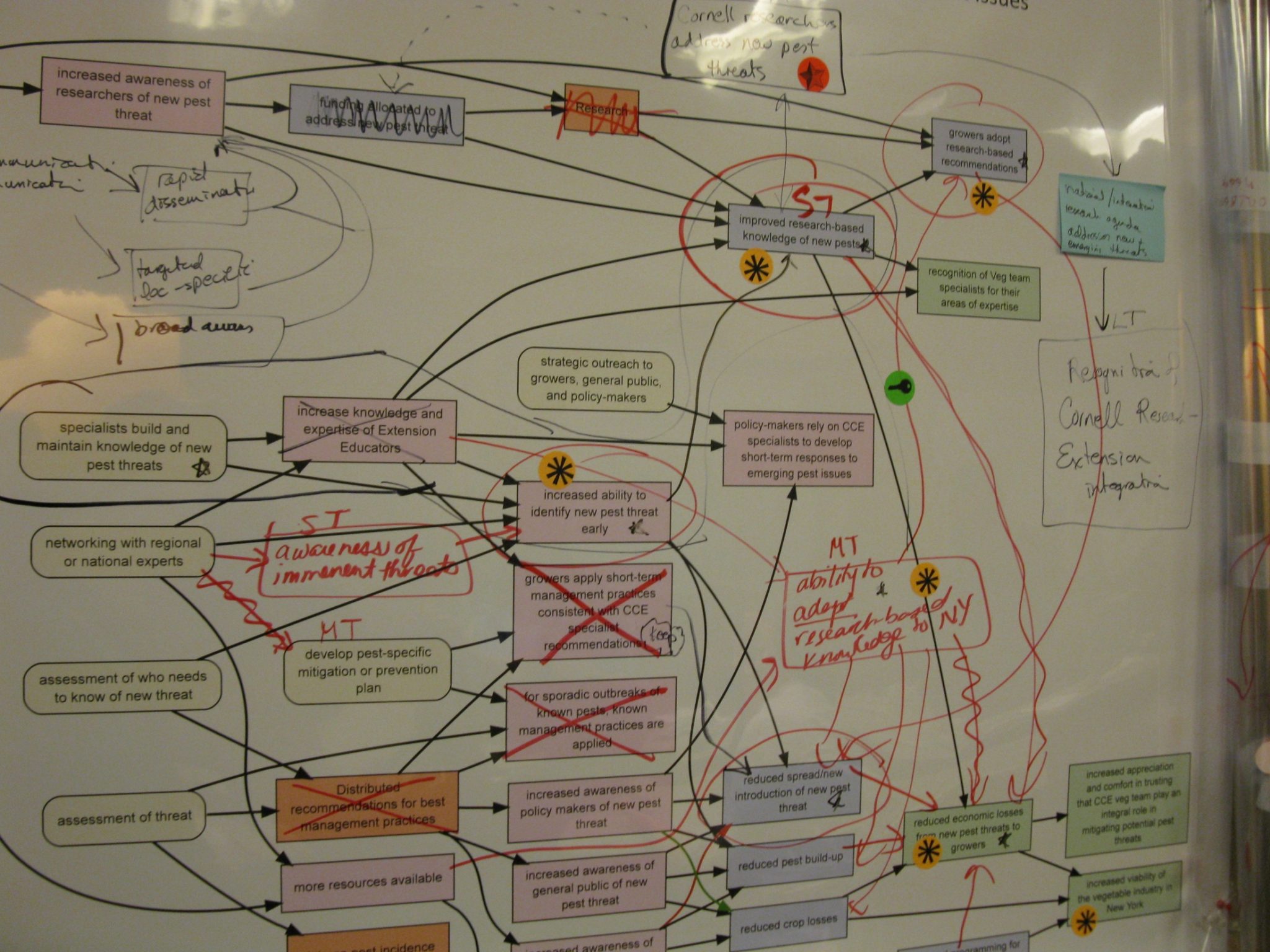

Of course, the process can get messy and complicated. (Figure 5)

But it is in that process – the shared building of the model, followed by iterations of commentary and feedback, all with the discipline of drawing a coherent theory of change – that new insights and shared understanding are brought to the surface.

In the course of our work with program practitioners we have observed the surprise benefits of this process so regularly that we named them “Aha!” Moments. The resulting insights have been valuable, as the following example illustrates:

“Aha!” Moment: Unearthing differences in implicit understanding of the program among practitioners. Inspire>Aspire is a character education program in which students complete a poster template after engaging in reflective activities. The program reaches tens of thousands of youth each year. Teachers nominate the top posters for an awards ceremony, and nominated students attend a ceremony that includes talks given by inspiring figures (e.g., Olympic athletes). The awards ceremony reaches only a small fraction of the youth who participate in the program. In the original iteration of the pathway model, one section of the model focused on the awards ceremony. The program developers had emphasized the awards ceremony because they committed a lot of time and resources to organizing and hosting it. When they examined the model closely, they identified several “dead-end” outcomes: a short-term outcome that didn’t connect to any medium- or long-term outcomes, and two medium-term outcomes that didn’t connect to any long-term outcomes. Through the modeling exercise, they realized that the awards ceremony was not actually at the core of the program; this realization resulted in a shift in thinking about the distribution of resources and focus (e.g., more resources dedicated to developing teacher resources rather than the awards ceremony).
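For Working Groups that keep their pathway model in digital form, the “dead-end” check described in this example can be sketched in a few lines of Python. This is only an illustrative sketch and not part of the SEP itself. The function name `find_dead_end_outcomes`, the stage labels, and the node names (`A1`, `S1`, and so on) are all hypothetical, and the example model is invented rather than taken from Inspire>Aspire. It also assumes one particular reading of “dead-end”: a short- or mid-term outcome from which no chain of arrows reaches a long-term outcome.

```python
from collections import defaultdict


def find_dead_end_outcomes(stages, arrows):
    """Return short- and mid-term outcomes that cannot reach a long-term outcome.

    stages: dict mapping node name -> "activity", "short", "mid", or "long"
    arrows: list of (source, target) pairs, one per arrow in the pathway model
    """
    # Index the arrows by the node they start from.
    successors = defaultdict(list)
    for source, target in arrows:
        successors[source].append(target)

    def reaches_long_term(node, seen):
        # Depth-first walk along the arrows; `seen` guards against loops.
        if stages[node] == "long":
            return True
        seen.add(node)
        return any(
            reaches_long_term(nxt, seen)
            for nxt in successors[node]
            if nxt not in seen
        )

    return sorted(
        node
        for node, stage in stages.items()
        if stage in ("short", "mid") and not reaches_long_term(node, set())
    )


# Hypothetical model: two activities, two short-term outcomes,
# two mid-term outcomes, and one long-term outcome.
stages = {
    "A1": "activity", "A2": "activity",
    "S1": "short", "S2": "short",
    "M1": "mid", "M2": "mid",
    "L1": "long",
}
arrows = [
    ("A1", "S1"), ("S1", "M1"), ("M1", "L1"),  # a complete pathway
    ("A2", "S2"), ("A2", "M2"),                # outcomes that lead nowhere
]

dead_ends = find_dead_end_outcomes(stages, arrows)
print(dead_ends)
```

In this made-up model, the outcomes fed only by activity `A2` are the ones flagged. A list like this is a prompt for discussion, not a verdict: the group still has to decide whether an arrow is missing from the model or whether that part of the program matters less than assumed.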

So what do these kinds of side-benefits have to do with managing heavy workloads? These insights can contribute to several other responsibilities that program managers have, all of which take time: managing program resources effectively, communicating with stakeholders about how a program works and what really matters, developing persuasive grant proposals for future funding, building a team in which program staff and volunteers are all on the same page, and so on. Time and time again, participants in SEP Working Groups have cited these kinds of outcomes as part of the value of the SEP process.

—

Many thanks to Monica and Jennifer for their contribution of this excellent series on the Systems Evaluation Protocol! To learn more about their work, please visit https://core.human.cornell.edu/research/systems/protocol/.
